By: Bart Baesens, Seppe vanden Broucke

This QA first appeared in Data Science Briefings, the DataMiningApps newsletter, as a “Free Tweet Consulting Experience”, where we answer a data science or analytics question of at most 140 characters. Also want to submit your question? Just Tweet us @DataMiningApps. Want to remain anonymous? Then send us a direct message and we’ll keep all your details private. Subscribe now for free if you want to be the first to receive our articles and stay up to date on data science news, or follow us @DataMiningApps.

You asked: I heard that it is possible to extract decision trees from a neural network. Can you tell me how this can be done?

Our answer:

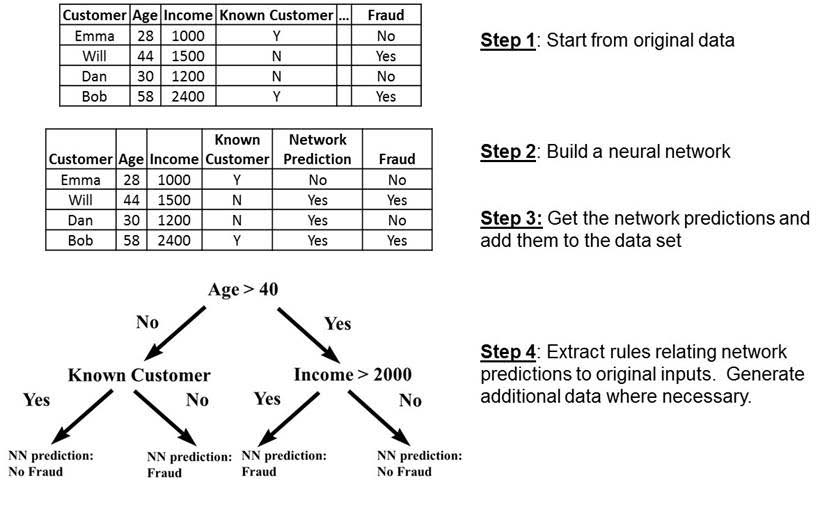

The simplest approach is to treat the neural network as a black box and use its predictions, instead of the original target labels, as the target variable for a decision tree algorithm (e.g. See5, CART, CHAID), as illustrated in the figure above.

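As a minimal sketch, assuming Python with scikit-learn installed, the black-box approach takes only a few lines. scikit-learn's `DecisionTreeClassifier` implements an optimized version of CART, so it stands in here for the CART option mentioned above. The toy data set, the network architecture and the tree depth are arbitrary illustrative choices. The agreement rate between tree and network (often called *fidelity*) is computed only to show how closely the tree mimics the network.

```python
# Sketch: train a decision tree on the neural network's predictions
# (not on the original labels) so that the tree mimics the network.
from sklearn.datasets import make_moons
from sklearn.neural_network import MLPClassifier
from sklearn.tree import DecisionTreeClassifier, export_text

# Toy two-feature data set (assumption: any tabular classification data works).
X, y = make_moons(n_samples=500, noise=0.25, random_state=0)

# 1. Train the "black box" neural network on the original labels.
net = MLPClassifier(hidden_layer_sizes=(16, 16), max_iter=2000, random_state=0)
net.fit(X, y)

# 2. Replace the original labels by the network's predictions.
y_net = net.predict(X)

# 3. Fit a shallow, readable CART-style tree to the network's predictions.
surrogate = DecisionTreeClassifier(max_depth=3, random_state=0)
surrogate.fit(X, y_net)

# Fidelity: fraction of observations where the tree agrees with the network.
fidelity = (surrogate.predict(X) == y_net).mean()
rules = export_text(surrogate, feature_names=["x1", "x2"])

print(f"fidelity to the network: {fidelity:.2f}")
print(rules)
```

The printed rules describe the network's behaviour, not the original data. A low fidelity would signal that the tree is too shallow to explain the network faithfully.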

In this approach, the training data set can be further augmented with artificial data, which is then labeled (classified or predicted) by the neural network. This increases the number of observations available for making the splitting decisions when building the decision tree. Since this approach does not use the network's parameters or internal model representation, it can be applied to essentially any underlying algorithm, such as SVMs, k-nearest neighbor, etc. Naturally, the decision tree serves to enhance the explainability and interpretability of the black-box model, while the latter can still be used to generate the actual predictions.
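A possible sketch of the augmentation step, again assuming Python with NumPy and scikit-learn. To illustrate that the approach is model-agnostic, the black box here is an RBF-kernel SVM rather than a neural network. Drawing artificial observations uniformly within the observed range of each feature is only one assumed sampling scheme; others, such as perturbing existing observations, are equally possible.

```python
# Sketch: augment the training set with artificial observations labeled by
# the black box, then fit a decision tree on the enlarged data set.
import numpy as np
from sklearn.datasets import make_moons
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

rng = np.random.default_rng(0)
X, y = make_moons(n_samples=200, noise=0.25, random_state=0)

# Any fitted model with a predict() method can act as the black box.
black_box = SVC(kernel="rbf", gamma=2.0).fit(X, y)

# Artificial observations: uniform samples within each feature's observed
# range (an assumed sampling scheme, chosen for simplicity).
n_artificial = 2000
low, high = X.min(axis=0), X.max(axis=0)
X_art = rng.uniform(low, high, size=(n_artificial, X.shape[1]))

# Label original AND artificial observations with the black box, not with y.
X_aug = np.vstack([X, X_art])
y_aug = black_box.predict(X_aug)

tree = DecisionTreeClassifier(max_depth=4, random_state=0).fit(X_aug, y_aug)

# Fidelity measured on the original observations.
fidelity = (tree.predict(X) == black_box.predict(X)).mean()
print(f"training rows for the tree: {len(X_aug)}, fidelity: {fidelity:.2f}")
```

In this setup `black_box.predict` would still produce the actual predictions, while `tree` only serves as an explanation of them.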