By: Bart Baesens, Seppe vanden Broucke

This QA first appeared in Data Science Briefings, the DataMiningApps newsletter. Also want to submit your question? Just Tweet us @DataMiningApps. Want to remain anonymous? Then send us a direct message and we’ll keep all your details private. Subscribe now for free if you want to be the first to receive our articles and stay up to data on data science news, or follow us @DataMiningApps.

You asked: What is meant by denormalizing data for analytics?

Our answer:

The application of analytics typically requires or presumes the data will be presented in a single table containing and representing all the data in a structured way. A structured data table enables straightforward processing and analysis. Typically, the rows of a data table represent the basic entities to which the analysis applies (e.g. customers, transactions, firms, claims, cases, etc.). The rows are also called instances, observations, or lines. The columns in the data table contain information about the basic entities. Plenty of synonyms are used to denote the columns of the data table, such as (explanatory) variables, fields, characteristics, indicators, features, etc.

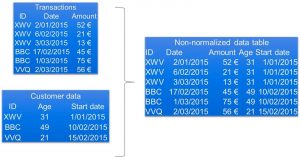

Denormalization refers to the merging of several normalized source data tables into an aggregated, denormalized data table. Merging tables involves selecting information from different tables related to an individual entity, and copying it to the aggregated data table. The individual entity can be recognized and selected in these tables by making use of (primary) keys, which have been included in the table to allow identifying and relating observations from different source tables pertaining to the same entity.

This figure illustrates merging two tables (i.e., transaction data and customer data) into a single denormalized data table by using the key attribute type ID that connects observations in the transactions table with observations in the customer table. The same approach can be followed to merge as many tables as required, but the more tables are merged, the more duplicate data might be included in the resulting table, due to the denormalization. It is crucial that no errors are introduced during this process, so checks should be applied to control the resulting table and to make sure that all information is correctly integrated.